Real-Time Tracking with a Pan-Tilt Camera

The human eye is highly skilled at tracking moving objects and does so effortlessly. This ability relies on the brain's processing power, the rapid response of the extraocular muscles, and the light weight of the eyeball. In contrast, a small camera mounted on a servo is often too heavy and slow to replicate the eye's agility. Advances in camera miniaturization, however, have made small, easily manipulated cameras available, which makes fast computer-controlled tracking feasible. This project uses a first-person view (FPV) camera designed for model airplanes, mounted on servo motors that aim it with two degrees of freedom. The assembly weighs only 32 grams, slightly more than a human eyeball, and together with a GPU-based tracking algorithm it tracks a variety of patterns and objects quickly and stably. A demonstration video shows the camera following a moving person. The setup uses a 420-line pan-tilt camera from Fat Shark, which rotates approximately 170° on the yaw axis and 90° on the pitch axis and outputs PAL composite video. A 12V rechargeable lithium battery powers the system, and a voltage regulator supplies 5V to the camera and servos, with capacitors before and after the regulator to stabilize the voltage. The camera's video and servo cables connect to headers on the voltage regulator circuit, which also provides a 12V supply for the video transmitter. A USB frame grabber from StarTech, which supports both NTSC and PAL, captures the video for the host PC. The video is downsampled to half resolution for tracking and displayed at full resolution after deinterlacing. An Arduino Diecimila receives the desired servo pulse widths over its serial port and generates the servo control signals. A wireless RF link transmits the servo angles up to 500 feet, with the transmitter and receiver each connected to an Arduino running the Virtual Wire library.

The tracking system described here uses a lightweight FPV camera mounted on servo motors to follow movement responsively, in the manner of the human eye. Because the camera and servos are small and light, the assembly could be integrated into other applications, particularly in robotics and remote sensing. The two-axis servo mount lets the computer set the camera's yaw and pitch, so it can follow moving subjects.

The Fat Shark camera's 420-line image is sufficient for the tracking algorithm, and its PAL composite output is supported by the USB frame grabber. Regulating the 12V battery down to 5V keeps both the camera and the servos powered stably during tracking, and the capacitors before and after the regulator filter out voltage fluctuations that could otherwise disturb the camera.

The USB frame grabber brings the video into the host PC in real time, where the tracking algorithm analyzes the incoming frames. Downsampling the frames to half resolution reduces the computational load while keeping enough detail for tracking. The Arduino Diecimila converts the pulse widths computed on the host into PWM signals for the two servos.

The wireless RF link lets the servo angles be sent from up to 500 feet away, which is useful where a cable to the camera is impractical. The Virtual Wire library handles the wireless communication between the two Arduinos.

Overall, the system combines a miniaturized camera, simple power regulation, and GPU-based processing into a compact solution for tracking moving subjects.

The human eye is amazingly adept at tracking moving objects. The process is so natural to humans that it happens without any conscious effort. While this remarkable ability depends in part on the human brain's immense processing power, the fast response of the extraocular muscles and the eyeball's light weight are also vital. Even a small point-and-shoot camera mounted on a servo is typically too heavy and slow to move with the agility of the human eye. How, then, can we give a computer the ability to track movement quickly and responsively? Thanks to recent progress in camera miniaturization, small, easily manipulable cameras are now readily available.

In this project, we use a first-person view (FPV) camera intended for use on model airplanes. The camera is mounted on servo motors that aim it with two degrees of freedom. The entire assembly weighs only 32 grams, only slightly more than a typical human eyeball. Coupled with a GPU-based tracking algorithm, the FPV camera allows the computer to robustly track a wide array of patterns and objects with excellent speed and stability. We built a simple camera tracking system using the FPV camera, and the video clip above shows a short demonstration in which the tracking camera snaps to a person moving in front of it. We show both the view captured by the tracking camera (the smaller video) and the view from a different camera that shows the movement of the tracking camera (the larger video).
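The write-up does not say how the tracker's output becomes a servo command. As a hedged illustration only, the sketch below applies a simple proportional correction: it converts the target's pixel offset from the frame center into an approximate angular error and moves part of the way toward it on each frame. The field-of-view values, the gain, and the function name `update_angles` are assumptions, not figures from this project; the 320 × 240 frame size matches the half-resolution tracking frames.

```python
# Hypothetical sketch: turn the tracker's output (target center in pixels)
# into new pan/tilt angles with a simple proportional step.
# FOV values and gain are assumptions, not values from this project.

FRAME_W, FRAME_H = 320, 240      # half-resolution tracking frame
HFOV_DEG, VFOV_DEG = 60.0, 45.0  # assumed lens field of view
GAIN = 0.5                       # assumed fraction of the error corrected per frame


def update_angles(pan, tilt, target_x, target_y):
    """Return new (pan, tilt) in degrees that move the target toward the frame center."""
    # Pixel offset of the target from the image center.
    dx = target_x - FRAME_W / 2
    dy = target_y - FRAME_H / 2
    # Approximate angular error, treating degrees-per-pixel as constant across the frame.
    err_pan = dx * HFOV_DEG / FRAME_W
    err_tilt = dy * VFOV_DEG / FRAME_H
    # Image y grows downward; this assumes positive tilt points the camera up.
    return pan + GAIN * err_pan, tilt - GAIN * err_tilt
```

A gain below 1 trades some speed for stability: the camera closes half the remaining error each frame instead of overshooting when the tracker's estimate is noisy.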

We used a 420-line pan-tilt camera manufactured by Fat Shark. The camera is mounted on two servo motors, which allow for about 170° of rotation on the yaw axis and 90° of rotation on the pitch axis. The camera produces composite video in PAL format. An NTSC version of the camera is available as well, but it was out of stock when we ordered our parts.
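Each servo's travel has to be expressed as a pulse width. A minimal sketch, assuming a linear calibration in which pulses of 1000 to 2000 µs span each servo's full travel (the real endpoints would need to be measured); `angle_to_pulse_us` is a hypothetical helper, and angles are measured from the center of travel:

```python
# Assumed calibration: pulse widths 1000 to 2000 us span each servo's full travel.
MIN_US, MAX_US = 1000, 2000
YAW_RANGE_DEG = 170.0    # approximate yaw travel of the pan-tilt mount
PITCH_RANGE_DEG = 90.0   # approximate pitch travel


def angle_to_pulse_us(angle_deg, range_deg):
    """Map an angle from center (e.g. -85..+85 for yaw) to a pulse width in us."""
    half = range_deg / 2
    # Clamp to the mechanical limits so the servo is never driven past its stops.
    angle_deg = max(-half, min(half, angle_deg))
    frac = (angle_deg + half) / range_deg  # 0.0 at one stop, 1.0 at the other
    return round(MIN_US + frac * (MAX_US - MIN_US))
```

For example, `angle_to_pulse_us(0, YAW_RANGE_DEG)` gives the 1500 µs center pulse, and any request beyond ±85° of yaw is clamped to the end of travel.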

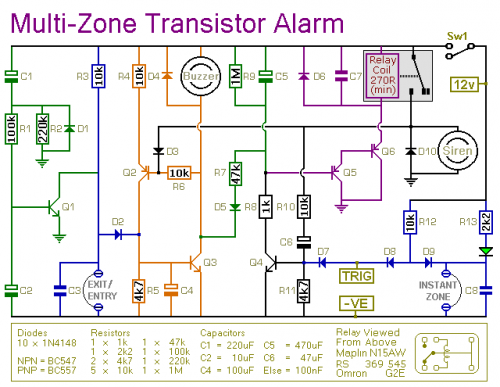

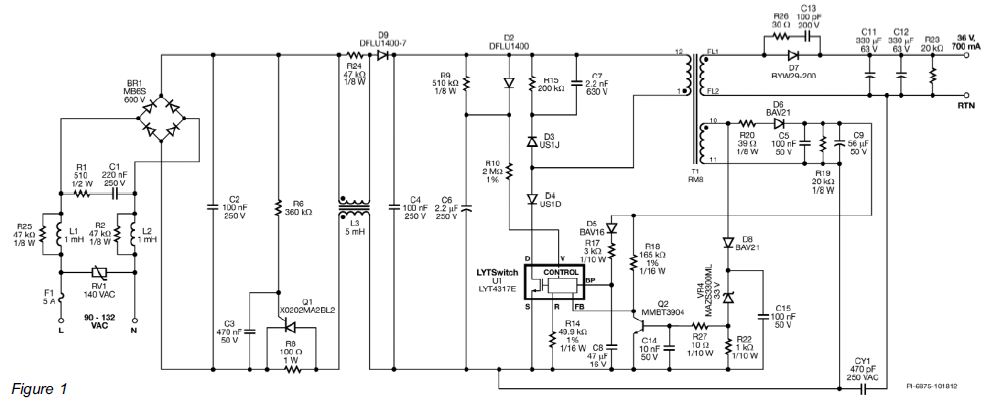

Because the video transmitter requires 12 volts, we power the camera with a 12V rechargeable lithium battery. We use a voltage regulator to provide 5 volts for the camera and the servos. We added capacitors before and after the regulator to eliminate any voltage fluctuations. Both the video and servo cables from the camera connect to headers on the voltage regulator circuit, which provide the regulated 5V power supply.
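The regulator type is not specified. If it is a linear regulator, it drops the difference between the battery and output voltages across itself, so its heat dissipation grows with the load current. With a purely hypothetical combined camera-and-servo draw of 0.5 A (not a measured figure):

$$
P_{\text{reg}} = (V_{\text{in}} - V_{\text{out}})\,I_{\text{load}} = (12\,\text{V} - 5\,\text{V}) \times 0.5\,\text{A} = 3.5\,\text{W}
$$

Servo current peaks when the servos start moving, so a linear regulator in this role may need a heat sink, whereas a switching regulator would waste less of the battery's energy.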

The servo control signals and the video output are passed through to a second set of headers, which connect to the Arduino and the frame grabber, respectively. The video output header additionally provides a 12V power supply for the video transmitter. We used a USB frame grabber manufactured by StarTech to read the video into the host PC. The frame grabber supports both NTSC and PAL composite video, so the NTSC version of the camera could be used without any hardware changes.

We used a video cable sold by Digital Products Company to connect the frame grabber to the video output header on the voltage regulator circuit. The cable also has a power jack, which provides 12 volts to the video transmitter. The frame grabber provides 640 × 480 interlaced video at 25 FPS. For efficiency, we downsample the video to half resolution for tracking. Our downsampling filter discards the even lines, keeping a single field, which eliminates tracking errors due to combing artifacts. We display the video at full resolution, after eliminating combing artifacts with a standard deinterlacing filter.
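A minimal sketch of this kind of field-based downsample, assuming a frame is a list of rows of grayscale integers. Whether "even lines" means 0-based or 1-based numbering is not stated, so the choice of field here is an assumption, as is halving the width by averaging neighboring pixels; `downsample_field` is a hypothetical name.

```python
def downsample_field(frame):
    """Halve an interlaced frame (e.g. 640x480 -> 320x240) using a single field."""
    # Keep rows 1, 3, 5, ... (0-based), dropping the even-indexed rows. The two
    # PAL fields are captured 20 ms apart, so never mixing them avoids combing.
    field = frame[1::2]
    # Halve the width by averaging horizontally adjacent pixel pairs.
    return [
        [(row[i] + row[i + 1]) // 2 for i in range(0, len(row) - 1, 2)]
        for row in field
    ]
```

Because only one field is kept, moving objects appear without the sawtooth edges that would otherwise confuse the tracker, at the cost of half the vertical resolution.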

We used an Arduino Diecimila to generate the control signals for the servos. The Arduino receives the desired pulse widths for the servos over its serial port. Each pulse width is encoded as a 16-bit integer, with 1 bit reserved to select one of the two servos. We use the servo library included with the Arduino software to generate the PWM signals. The wireless RF link transmits the servo angles digitally, with a range of up to 500 feet. Both the transmitter and receiver connect to Arduinos running the Virtual Wire library. The transmitter Arduino (connected to the host PC) broadcasts each 2-byte angle, followed by a …
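The exact bit layout and byte order of the 16-bit command are not given. A minimal sketch, assuming bit 15 selects the servo (0 for yaw, 1 for pitch), the low 15 bits carry the pulse width in microseconds, and the two bytes are sent least-significant first; `encode_command` and `decode_command` are hypothetical names:

```python
import struct

SERVO_SELECT_BIT = 0x8000  # assumed: bit 15 picks the servo
WIDTH_MASK = 0x7FFF        # remaining 15 bits: pulse width in microseconds


def encode_command(servo, pulse_us):
    """Pack a servo index (0 or 1) and a pulse width into 2 bytes (assumed little-endian)."""
    if servo not in (0, 1):
        raise ValueError("servo must be 0 or 1")
    if not 0 <= pulse_us <= WIDTH_MASK:
        raise ValueError("pulse width does not fit in 15 bits")
    word = (SERVO_SELECT_BIT if servo else 0) | pulse_us
    return struct.pack("<H", word)


def decode_command(data):
    """Inverse of encode_command: recover (servo, pulse_us) from the 2 bytes."""
    (word,) = struct.unpack("<H", data)
    return (1 if word & SERVO_SELECT_BIT else 0), word & WIDTH_MASK
```

On the host, the two bytes could be written to the Arduino's serial port, for example with pySerial's `Serial.write`. Fifteen bits allow pulse widths up to 32767 µs, far beyond the roughly 1 to 2 ms pulses hobby servos use, so reserving the top bit costs nothing in practice.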