Sampling Theorem

1. Definition and Mathematical Formulation

1.1 Definition and Mathematical Formulation

The Sampling Theorem, also known as the Nyquist-Shannon Theorem, establishes the conditions under which a continuous-time signal can be perfectly reconstructed from its discrete samples. The theorem states that a bandlimited signal with no frequency components above B Hz can be exactly reconstructed if sampled at a rate fs greater than twice B:

$$ f_s > 2B $$

This minimum required sampling rate, 2B, is called the Nyquist rate. Sampling below this rate leads to aliasing, where higher frequency components fold back into the lower frequency spectrum, causing irreversible distortion.

Mathematical Derivation

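Consider a continuous-time signal x(t) with Fourier transform X(f) that is bandlimited to B Hz:

$$ X(f) = 0 \quad \text{for } |f| > B $$

The sampled signal xs(t) is obtained by multiplying x(t) with an impulse train s(t) with period Ts = 1/fs:

$$ x_s(t) = x(t)\,s(t) = \sum_{n=-\infty}^{\infty} x(nT_s)\,\delta(t - nT_s) $$

In the frequency domain, this multiplication becomes a convolution:

$$ X_s(f) = X(f) * S(f) = f_s \sum_{k=-\infty}^{\infty} X(f - k f_s) $$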

where S(f) is the Fourier transform of the sampling function. The spectrum of the sampled signal consists of copies of X(f) centered at integer multiples of fs.

Reconstruction Condition

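For perfect reconstruction, the spectral copies must not overlap. The copy centered at fs extends down to fs - B, so it must stay above the baseband edge at B. This requires:

$$ f_s - B > B \quad \Longleftrightarrow \quad f_s > 2B $$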

When this condition is met, the original signal can be recovered by applying an ideal low-pass filter with cutoff frequency fc satisfying B < fc < fs - B.
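As a small illustration of the condition above, the following sketch checks whether a sampling rate leaves room for an ideal low-pass filter and, if so, returns the admissible cutoff interval B < fc < fs - B. The function name `cutoff_range` and the example values are illustrative assumptions, not part of the original text.

```python
def cutoff_range(bandwidth_hz: float, fs_hz: float) -> tuple[float, float]:
    """Return the open interval (B, fs - B) of valid ideal low-pass cutoffs.

    Raises ValueError when fs <= 2B, i.e. when the spectral copies would overlap.
    """
    if bandwidth_hz <= 0 or fs_hz <= 0:
        raise ValueError("bandwidth and sampling rate must be positive")
    if fs_hz <= 2 * bandwidth_hz:
        raise ValueError("fs must exceed 2B for the spectral copies to stay separate")
    return bandwidth_hz, fs_hz - bandwidth_hz


# Example: 20 kHz audio bandwidth sampled at 44.1 kHz.
low, high = cutoff_range(20_000.0, 44_100.0)
print(f"valid cutoff range: {low:.0f} Hz < fc < {high:.0f} Hz")
```

The width of the returned interval, fs - 2B, is the guard band available to a real filter's transition region.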

Practical Implications

In real-world applications:

- Audio CD quality uses fs = 44.1 kHz to cover human hearing up to 20 kHz

- Digital oscilloscopes typically sample at 5-10 times the signal bandwidth

- Anti-aliasing filters are essential to enforce bandlimiting before sampling

1.2 Nyquist Rate and Nyquist Frequency

The Nyquist rate and Nyquist frequency are fundamental concepts in signal processing that define the minimum sampling requirements for perfect signal reconstruction. These terms originate from the work of Harry Nyquist and Claude Shannon, who formalized the mathematical foundation of sampling theory.

Mathematical Definition

For a bandlimited signal x(t) with maximum frequency fmax, the Nyquist rate is defined as:

$$ f_{\text{Nyq}} = 2 f_{\max} $$

This represents the minimum sampling frequency required to avoid aliasing. The Nyquist frequency, conversely, is half the sampling rate:

$$ f_N = \frac{f_s}{2} $$

These two quantities form complementary boundaries in sampling systems - one being the minimum acceptable sampling rate, the other being the maximum representable frequency for a given sampling rate.

Physical Interpretation

The factor of 2 in the Nyquist rate arises from the need to capture both the positive and negative frequency components of a signal's Fourier transform. When sampling at exactly the Nyquist rate:

- The signal spectrum becomes periodic with period fs

- Adjacent spectral replicas touch at fs/2

- Any frequency component above fs/2 will alias into the baseband

Practical Considerations

In real-world applications, several factors necessitate sampling above the theoretical Nyquist rate:

- Anti-aliasing filters require transition bands

- Non-ideal reconstruction filters benefit from oversampling

- Quantization noise shaping techniques exploit higher rates

A common engineering practice is to sample at 2.2-2.5 times fmax to accommodate these practical constraints. In high-performance systems like digital oscilloscopes, sampling rates often exceed 10 times the Nyquist rate to capture signal details with minimal distortion.

Historical Context

Nyquist's original 1928 work focused on telegraph transmission speeds, establishing that a bandwidth-limited channel could carry at most 2B independent pulses per second. Shannon later generalized this in his 1948 sampling theorem, connecting it to information theory and establishing the modern interpretation.

Modern Applications

Contemporary systems leverage these principles in:

- Software-defined radio (SDR) architectures

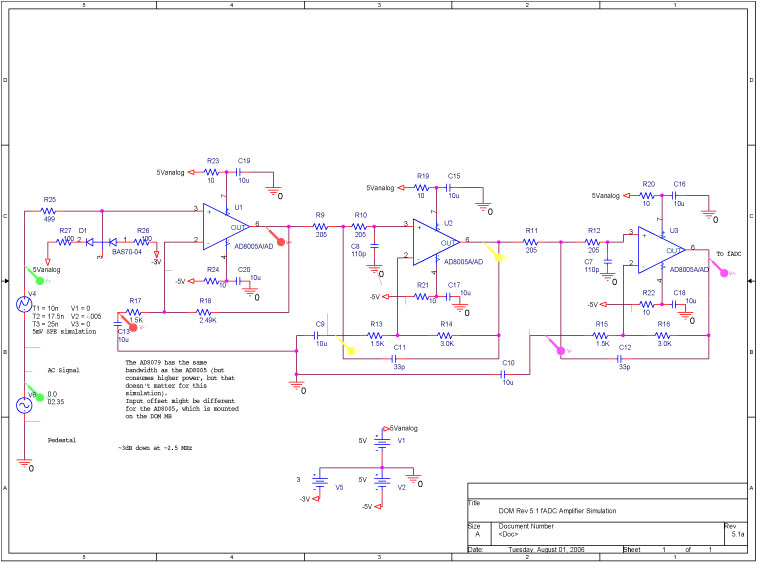

- Oversampled analog-to-digital converters (ADCs)

- Compressed sensing techniques

- Digital communication systems

where the last term shows the SNR improvement from oversampling by a factor of fs/(2B).

1.3 Aliasing and Its Implications

Aliasing occurs when a signal is sampled at a rate insufficient to capture its highest frequency components, violating the Nyquist criterion. If a signal with frequency f is sampled at a rate fs where fs < 2f, higher-frequency components are misrepresented as lower frequencies in the reconstructed signal. This phenomenon arises from spectral overlap in the frequency domain, where copies of the original spectrum centered at multiples of fs interfere with the baseband spectrum.

Mathematical Derivation of Aliasing

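Consider a sinusoidal signal x(t) = cos(2πf0t) sampled at intervals Ts = 1/fs. The sampled signal is:

$$ x[n] = \cos(2\pi f_0 n T_s) = \cos\left(2\pi \frac{f_0}{f_s} n\right) $$

If f0 > fs/2, the frequency f0 is indistinguishable from a lower frequency falias = |f0 - kfs|, where k is an integer such that falias ≤ fs/2. This is evident from the periodicity of the discrete-time Fourier transform (DTFT):

$$ X\left(e^{j(\omega + 2\pi k)}\right) = X\left(e^{j\omega}\right), \qquad \omega = 2\pi \frac{f}{f_s} $$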
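The folding rule can be checked numerically. The sketch below computes the alias of a tone and confirms that the original and aliased cosines give the same samples; `alias_frequency` and the 700 Hz / 1 kHz values are assumptions chosen for illustration.

```python
import math


def alias_frequency(f0_hz: float, fs_hz: float) -> float:
    """Frequency in [0, fs/2] that f0 is indistinguishable from after sampling at fs."""
    folded = f0_hz % fs_hz               # remove whole multiples of fs
    return min(folded, fs_hz - folded)   # reflect the upper half onto the lower half


fs = 1000.0
f0 = 700.0
fa = alias_frequency(f0, fs)             # 700 Hz folds to 300 Hz

# Both cosines are evaluated at the sampling instants t = n / fs.
max_diff = max(
    abs(math.cos(2 * math.pi * f0 * n / fs) - math.cos(2 * math.pi * fa * n / fs))
    for n in range(50)
)
print(fa, max_diff)                      # the difference is at rounding-error level
```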

Practical Implications of Aliasing

Aliasing introduces irreversible distortions in sampled systems:

- Telecommunications: Undersampled channels exhibit crosstalk, where high-frequency noise folds into the signal band.

- Audio Processing: Ultrasonic frequencies (>20 kHz) alias into audible ranges, causing artifacts in digital recordings.

- Medical Imaging: MRI and CT scans require precise anti-aliasing filters to prevent false structural interpretations.

Visualizing Aliasing in the Frequency Domain

The Nyquist criterion ensures that spectral replicas in the sampled signal's Fourier transform do not overlap. When violated, high-frequency components (f > fs/2) "fold back" around fs/2, creating aliases. For a signal with bandwidth B, the sampling rate must exceed the Nyquist rate 2B to prevent overlap.

Mitigation Strategies

Anti-aliasing techniques include:

- Analog Prefiltering: A low-pass filter with cutoff fc ≤ fs/2 attenuates frequencies above Nyquist before sampling.

- Oversampling: Increasing fs reduces the risk of spectral overlap, relaxing filter requirements.

- Dithering: Adding controlled noise randomizes quantization errors. It does not prevent aliasing, but it keeps quantization distortion from adding signal-correlated artifacts.

2. Anti-Aliasing Filters

2.1 Anti-Aliasing Filters

Anti-aliasing filters are low-pass filters applied before sampling to ensure that no frequency components above the Nyquist frequency (fN = fs/2) remain in the signal. Without such filtering, higher-frequency components alias into the baseband, distorting the sampled signal irreversibly.

Mathematical Foundation

The necessity of anti-aliasing filters arises from the Fourier-domain representation of sampling. Consider a continuous-time signal x(t) with bandwidth B. The sampled signal xs(t) is given by:

$$ x_s(t) = \sum_{n=-\infty}^{\infty} x(nT_s)\,\delta(t - nT_s) $$

where Ts = 1/fs is the sampling interval. The Fourier transform of xs(t) is:

$$ X_s(f) = f_s \sum_{k=-\infty}^{\infty} X(f - k f_s) $$

Aliasing occurs when X(f - k fs) for k ≠ 0 overlaps with X(f) in the baseband [-fN, fN]. An ideal anti-aliasing filter eliminates all frequency components above fN:

$$ H(f) = \begin{cases} 1, & |f| \le f_N \\ 0, & |f| > f_N \end{cases} $$

Practical Implementation

Real-world anti-aliasing filters cannot achieve the ideal brick-wall response. Instead, they exhibit a transition band between the passband and stopband. Key design parameters include:

- Cutoff frequency (fc): Typically set slightly below fN to account for the transition band.

- Stopband attenuation: Must be sufficient to suppress aliasing components below the noise floor.

- Roll-off rate: Determines the steepness of the transition band (e.g., Butterworth, Chebyshev, or elliptic filters).

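The required filter order N for a given stopband attenuation As (dB) and transition bandwidth Δf = fstop - fc is approximated for a Butterworth filter, taking fc as the -3 dB frequency, as:

$$ N \ge \frac{\log_{10}\left(10^{A_s/10} - 1\right)}{2\log_{10}\left(f_{stop}/f_c\right)}, \qquad f_{stop} = f_c + \Delta f $$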
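A minimal sketch of this estimate follows, assuming the standard Butterworth magnitude response |H(f)|² = 1 / (1 + (f/fc)^(2N)) with fc as the -3 dB point. The function name and the audio figures in the example are illustrative assumptions.

```python
import math


def butterworth_order(atten_db: float, fc_hz: float, fstop_hz: float) -> int:
    """Smallest Butterworth order giving at least atten_db of attenuation at fstop.

    Assumes fc is the -3 dB frequency of |H(f)|^2 = 1 / (1 + (f / fc)^(2N)).
    """
    if atten_db <= 0:
        raise ValueError("attenuation must be positive (in dB)")
    if fstop_hz <= fc_hz:
        raise ValueError("fstop must lie above fc")
    numerator = math.log10(10 ** (atten_db / 10) - 1)
    denominator = 2 * math.log10(fstop_hz / fc_hz)
    return max(1, math.ceil(numerator / denominator))


# 20 kHz passband edge, 60 dB required at the Nyquist frequency.
print(butterworth_order(60, 20_000, 22_050))   # fs = 44.1 kHz  -> 71
print(butterworth_order(60, 20_000, 88_200))   # fs = 176.4 kHz -> 5
```

Under these assumptions, quadrupling the sampling rate drops the required order from 71 to 5, which is the relaxation that oversampling provides.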

Trade-offs and Design Considerations

Anti-aliasing filters introduce phase distortion and group delay, which may be critical in time-sensitive applications. Higher-order filters improve stopband attenuation but exacerbate these effects. Multirate sampling or oversampling can relax filter requirements by increasing fN.

In high-speed systems, switched-capacitor filters or active RC filters are common. For precision applications, finite impulse response (FIR) filters provide linear phase response at the cost of higher computational complexity.

Applications

Anti-aliasing filters are ubiquitous in:

- Digital audio: Ensuring fidelity in analog-to-digital conversion (e.g., 44.1 kHz sampling with a 20 kHz cutoff).

- Medical imaging: Preventing artifacts in MRI or ultrasound signal acquisition.

- Software-defined radio: Isolating channels before downsampling.

2.2 Sampling in Real-World Systems

In practical systems, the ideal sampling conditions prescribed by the Nyquist-Shannon theorem are often violated due to non-idealities in hardware and signal conditions. Understanding these deviations is critical for designing robust sampling systems.

Non-Ideal Sampling Effects

Real-world sampling introduces several imperfections not accounted for in the idealized model:

- Aperture uncertainty (jitter): Variations in the sampling instant caused by clock instability. For a signal with bandwidth B, the SNR limit imposed by an rms jitter σt is:

$$ \mathrm{SNR}_{jitter} = -20\log_{10}\left(2\pi B \sigma_t\right) \ \text{dB} $$

- Finite acquisition time: Non-zero aperture width causes amplitude attenuation at higher frequencies. The frequency response is:

$$ H(f) = \frac{\sin(\pi f \tau)}{\pi f \tau} $$

where τ is the aperture time.

- Quantization noise: For an N-bit ADC, the theoretical SNR is:

$$ \mathrm{SNR} = 6.02N + 1.76 \ \text{dB} $$
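The three effects in this list can be put side by side numerically. The sketch below assumes the usual full-scale-sinusoid expressions: -20·log10(2πfσt) for jitter, sin(πfτ)/(πfτ) for the aperture, and 6.02N + 1.76 dB for quantization. Combining sources by adding noise powers assumes they are independent. All function names and example values are illustrative.

```python
import math


def jitter_snr_db(f_hz: float, sigma_t_s: float) -> float:
    """SNR limit from rms aperture jitter for a full-scale sinusoid at f_hz."""
    return -20 * math.log10(2 * math.pi * f_hz * sigma_t_s)


def aperture_gain(f_hz: float, tau_s: float) -> float:
    """Magnitude of sin(pi f tau) / (pi f tau) for an aperture of width tau."""
    x = math.pi * f_hz * tau_s
    return 1.0 if x == 0 else abs(math.sin(x) / x)


def quantization_snr_db(bits: int) -> float:
    """Ideal N-bit SNR for a full-scale sinusoid."""
    return 6.02 * bits + 1.76


def combined_snr_db(*snrs_db: float) -> float:
    """Combine independent noise sources by adding their noise powers."""
    noise = sum(10 ** (-s / 10) for s in snrs_db)
    return -10 * math.log10(noise)


# Example: 10 MHz input, 1 ps rms jitter, 14-bit converter, 1 ns aperture.
snr_j = jitter_snr_db(10e6, 1e-12)
snr_q = quantization_snr_db(14)
print(f"jitter limit:       {snr_j:.2f} dB")
print(f"quantization limit: {snr_q:.2f} dB")
print(f"combined:           {combined_snr_db(snr_j, snr_q):.2f} dB")
print(f"aperture gain:      {aperture_gain(10e6, 1e-9):.6f}")
```

In this example the jitter and quantization limits are within a few dB of each other, so neither can be ignored when budgeting the converter's noise.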

Anti-Aliasing Filter Design Constraints

Practical anti-aliasing filters must balance:

- Transition band width (dictated by sampling rate and signal bandwidth)

- Passband ripple (affecting signal fidelity)

- Stopband attenuation (controlling aliasing artifacts)

- Phase linearity (critical for time-domain applications)

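The required filter order n for given specifications can be estimated (for a Butterworth response) using:

$$ n \ge \frac{\log_{10}\left[\left(10^{A_s/10} - 1\right) / \left(10^{A_p/10} - 1\right)\right]}{2\log_{10}\left(\omega_s/\omega_p\right)} $$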

where As is stopband attenuation, Ap is passband ripple, and ωs, ωp are the stopband and passband frequencies.

Oversampling and Noise Shaping

Modern systems often employ oversampling techniques to relax anti-aliasing requirements:

- Oversampling ratio (OSR): Defined as fs/(2B), where B is the signal bandwidth. Each doubling of OSR improves SNR by 3 dB (0.5 bit).

- Sigma-delta modulation: Combines oversampling with noise shaping to push quantization noise out of band. The noise transfer function for a first-order modulator is:

$$ \mathrm{NTF}(z) = 1 - z^{-1} $$
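Both points in this list reduce to short formulas. The sketch below evaluates the oversampling gain 10·log10(OSR), which assumes white quantization noise spread evenly up to fs/2, and the magnitude of the first-order noise transfer function 1 - z^-1 on the unit circle. Function names and example rates are illustrative assumptions.

```python
import cmath
import math


def oversampling_gain_db(fs_hz: float, bandwidth_hz: float) -> float:
    """SNR gain 10*log10(OSR), with OSR = fs / (2B), for white quantization noise."""
    osr = fs_hz / (2 * bandwidth_hz)
    return 10 * math.log10(osr)


def ntf_first_order_mag(f_hz: float, fs_hz: float) -> float:
    """|1 - z^-1| evaluated at z = exp(j*2*pi*f/fs); equals 2*|sin(pi*f/fs)|."""
    z_inv = cmath.exp(-2j * math.pi * f_hz / fs_hz)
    return abs(1 - z_inv)


fs = 3.072e6          # example modulator rate
band = 24e3           # example signal bandwidth
print(f"OSR gain: {oversampling_gain_db(fs, band):.1f} dB")
for f in (1e3, 24e3, fs / 2):
    print(f"|NTF| at {f:>9.0f} Hz: {ntf_first_order_mag(f, fs):.4f}")
```

The noise transfer function is close to zero inside the signal band and rises to 2 at fs/2, which is what pushing quantization noise out of band means in practice.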

Practical Implementation Challenges

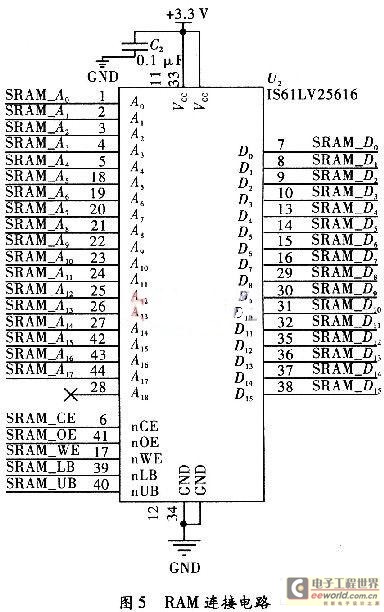

Key considerations in physical sampling systems include:

- Clock distribution networks with sub-picosecond jitter for high-speed ADCs

- Analog front-end settling time requirements

- PCB layout considerations for minimizing crosstalk and ground bounce

- Thermal noise limits in high-resolution converters

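For a 16-bit ADC sampling at 1 MSPS, the allowable thermal noise is approximately the rms quantization noise of the converter:

$$ v_{n,rms} \lesssim \frac{V_{FSR}}{2^{16}\sqrt{12}} $$

where VFSR is the full-scale range (typically 2-5 V).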

2.3 Quantization and Bit Depth

Quantization is the process of mapping continuous-amplitude sampled values to a finite set of discrete levels. The number of possible levels is determined by the bit depth N, which defines the resolution of the digital representation. For an N-bit system, the number of quantization levels L is given by:

$$ L = 2^N $$

The quantization step size Q, representing the smallest discernible amplitude difference, depends on the full-scale range VFSR:

$$ Q = \frac{V_{FSR}}{2^N} $$

Quantization Error and Signal-to-Noise Ratio

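Quantization introduces an error bounded by ±Q/2, assuming uniform quantization and rounding. This error manifests as quantization noise, which can be modeled as a uniformly distributed random variable with power:

$$ P_q = \frac{Q^2}{12} $$

For a sinusoidal input signal with peak-to-peak amplitude Vpp spanning the full scale, the signal power Ps is:

$$ P_s = \frac{1}{2}\left(\frac{V_{pp}}{2}\right)^2 = \frac{V_{pp}^2}{8} $$

The signal-to-quantization-noise ratio (SQNR) in decibels is then, with Vpp = VFSR = 2^N Q:

$$ \mathrm{SQNR} = 10\log_{10}\left(\frac{P_s}{P_q}\right) = 10\log_{10}\left(\frac{3}{2}\cdot 2^{2N}\right) \approx 6.02N + 1.76 \ \text{dB} $$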
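The 6.02N + 1.76 dB rule can be checked by quantizing a full-scale sine and measuring the error power directly. This is a sketch under stated assumptions: a mid-rise uniform quantizer, a 2 V full-scale range, and a tone whose frequency is an irrational fraction of the sampling rate so that the phases do not repeat. None of these choices come from the original text.

```python
import math


def quantize_midrise(x: float, bits: int, full_scale: float) -> float:
    """Uniform mid-rise quantizer covering [-full_scale/2, full_scale/2]."""
    q = full_scale / 2 ** bits
    code = math.floor(x / q)
    code = max(-(2 ** (bits - 1)), min(2 ** (bits - 1) - 1, code))  # clip to N bits
    return (code + 0.5) * q


def measured_sqnr_db(bits: int, num_samples: int = 20_000) -> float:
    """Empirical SQNR of a full-scale sine after N-bit quantization."""
    full_scale = 2.0
    amplitude = full_scale / 2                  # sine spans the full range
    cycles_per_sample = math.sqrt(2) / 100      # irrational: phases never repeat
    signal_power = 0.0
    noise_power = 0.0
    for n in range(num_samples):
        x = amplitude * math.sin(2 * math.pi * cycles_per_sample * n)
        error = quantize_midrise(x, bits, full_scale) - x
        signal_power += x * x
        noise_power += error * error
    return 10 * math.log10(signal_power / noise_power)


for bits in (8, 12, 16):
    print(f"{bits:2d} bits: measured {measured_sqnr_db(bits):6.2f} dB, "
          f"rule of thumb {6.02 * bits + 1.76:6.2f} dB")
```

The measured values should land close to the rule of thumb, with small deviations because the error of a quantized sine is only approximately uniform.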

Practical Considerations in Bit Depth Selection

Higher bit depths reduce quantization noise but increase data rate and processing complexity. The choice involves trade-offs:

- Audio systems: 16–24 bits (96–144 dB dynamic range)

- Scientific instrumentation: 24–32 bits for high-precision measurements

- Image processing: 8–16 bits per channel depending on color depth requirements

Non-uniform quantization (e.g., μ-law or A-law companding) is sometimes employed in telephony to improve perceived SNR for low-amplitude signals while maintaining a limited bit depth.

Dithering Techniques

When quantizing signals with amplitudes comparable to Q, adding controlled noise (dither) before quantization can:

- Prevent quantization-induced distortion patterns

- Improve perceived resolution through noise shaping

- Enable recovery of sub-LSB information in oversampled systems

The effectiveness of dither depends on its probability density function (PDF) and correlation properties. Common dither types include:

- Rectangular PDF dither: Uniform distribution over [-Q/2, Q/2]

- Triangular PDF dither: Convolution of two rectangular PDFs

- High-pass shaped dither:

$$ S_{nn}(f) = k(1 - \cos(2\pi fT_s)) $$
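The claim that dither lets sub-LSB information survive quantization can be illustrated with a constant input smaller than one step. The sketch below assumes a mid-tread rounding quantizer and triangular-PDF dither built as the sum of two independent uniform variables; the seed, step size, and input level are arbitrary illustrative choices.

```python
import random


def quantize(x: float, q: float) -> float:
    """Round to the nearest multiple of the step size q (mid-tread)."""
    return q * round(x / q)


def tpdf_dither(rng: random.Random, q: float) -> float:
    """Triangular-PDF dither on (-q, q): sum of two independent uniform values."""
    return rng.uniform(-q / 2, q / 2) + rng.uniform(-q / 2, q / 2)


rng = random.Random(0)      # fixed seed so the run is repeatable
q = 1.0
x = 0.3 * q                 # constant input below one quantization step

plain = [quantize(x, q) for _ in range(20_000)]
dithered = [quantize(x + tpdf_dither(rng, q), q) for _ in range(20_000)]

mean_plain = sum(plain) / len(plain)            # always 0: the input is lost
mean_dithered = sum(dithered) / len(dithered)   # averages toward 0.3

print(mean_plain, round(mean_dithered, 3))
```

Without dither every output is zero. With dither the individual outputs are noisier, but their average tracks the input, which is the basis for recovering sub-LSB detail by averaging or filtering in oversampled systems.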

3. The Role of the Reconstruction Filter

3.1 The Role of the Reconstruction Filter

The reconstruction filter is a critical component in the practical implementation of the sampling theorem. Its primary function is to recover the original continuous-time signal from its sampled version by eliminating spectral replicas introduced during the sampling process. Without adequate reconstruction filtering, these replicas remain in the output as imaging artifacts above fs/2 and distort the recovered signal.

Mathematical Foundation

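When a signal x(t) is sampled at intervals Ts, the resulting impulse-sampled signal xs(t) can be expressed as the product of x(t) and an impulse train:

$$ x_s(t) = x(t)\sum_{n=-\infty}^{\infty} \delta(t - nT_s) = \sum_{n=-\infty}^{\infty} x(nT_s)\,\delta(t - nT_s) $$

In the frequency domain, this multiplication becomes convolution, producing spectral replicas centered at integer multiples of the sampling frequency fs = 1/Ts:

$$ X_s(f) = \frac{1}{T_s} \sum_{k=-\infty}^{\infty} X(f - k f_s) $$

The ideal reconstruction filter Hr(f) is a perfect low-pass filter with cutoff frequency fc = fs/2 and a constant passband gain of Ts, which cancels the 1/Ts scaling introduced by impulse sampling and gives unity gain overall:

$$ H_r(f) = \begin{cases} T_s, & |f| \le f_s/2 \\ 0, & |f| > f_s/2 \end{cases} $$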

Practical Implementation Challenges

While the theoretical reconstruction filter has a brick-wall response, real-world filters must contend with several non-ideal characteristics:

- Transition bandwidth: Finite roll-off between passband and stopband

- Passband ripple: Non-uniform gain within the desired frequency range

- Group delay: Frequency-dependent time delay of signal components

- Stopband attenuation: Limited rejection of aliased components

Modern digital-to-analog converters typically employ oversampling combined with analog reconstruction filters to relax the requirements on the analog filter's steepness. A common approach uses:

- Digital interpolation filters to increase the effective sampling rate

- Multi-bit sigma-delta modulation for noise shaping

- Low-order analog anti-imaging filters

Time-Domain Interpretation

The reconstruction process can be viewed as convolution with the filter's impulse response. For the ideal filter, this corresponds to sinc interpolation:

$$ x(t) = \sum_{n=-\infty}^{\infty} x[n]\,\mathrm{sinc}\left(\frac{t - nT_s}{T_s}\right) $$

where x[n] are the sample values and the sinc function represents the ideal reconstruction filter's impulse response. This formulation demonstrates the non-causal nature of perfect reconstruction - requiring samples from both past and future.
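A minimal sketch of sinc interpolation follows, assuming the normalized kernel sinc(u) = sin(πu)/(πu) and a finite window of samples in place of the infinite sum. The truncation is an approximation that the ideal formula does not have, and the signal, rates, and window size are illustrative assumptions.

```python
import math


def sinc(u: float) -> float:
    """Normalized sinc: sin(pi u) / (pi u), with sinc(0) = 1."""
    return 1.0 if u == 0 else math.sin(math.pi * u) / (math.pi * u)


def sinc_interpolate(samples: dict[int, float], t: float, ts: float) -> float:
    """Evaluate sum_n x[n] * sinc((t - n*ts) / ts) over the available samples."""
    return sum(xn * sinc((t - n * ts) / ts) for n, xn in samples.items())


fs = 1000.0
ts = 1 / fs
f0 = 100.0                    # tone well below fs/2
half_window = 500             # truncation: samples n = -500 .. 500 only
samples = {n: math.cos(2 * math.pi * f0 * n * ts)
           for n in range(-half_window, half_window + 1)}

t = 0.37 * ts                 # a point between two sampling instants
estimate = sinc_interpolate(samples, t, ts)
exact = math.cos(2 * math.pi * f0 * t)
print(f"estimate {estimate:.6f}, exact {exact:.6f}, error {abs(estimate - exact):.2e}")
```

The residual error comes entirely from truncating the sum. Because the sinc tails decay only as 1/n, many samples on both sides of t are needed, which reflects the non-causal nature of ideal reconstruction.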

Design Trade-offs in Practical Systems

Engineers must balance several competing factors when implementing reconstruction filters:

| Parameter | Impact | Typical Specification |
|---|---|---|
| Passband flatness | Affects signal amplitude accuracy | ±0.1 dB |
| Stopband attenuation | Determines alias rejection | >60 dB |
| Phase linearity | Preserves waveform shape | <1° deviation |
| Group delay variation | Affects time alignment | <1 sample period |

In high-performance audio systems, reconstruction filters may achieve 24-bit resolution with passbands extending to 20 kHz and stopbands beginning at just 22 kHz, requiring transition bands as narrow as 2 kHz with >120 dB attenuation.

3.2 Ideal vs. Practical Reconstruction

In the context of the Sampling Theorem, reconstruction refers to the process of converting a discrete-time signal back into a continuous-time signal. While the ideal reconstruction is mathematically precise, practical implementations introduce unavoidable constraints that affect signal fidelity.

Ideal Reconstruction

The ideal reconstruction process is derived directly from the Whittaker-Shannon interpolation formula:

$$ x(t) = \sum_{n=-\infty}^{\infty} x[n]\,\mathrm{sinc}\left(\frac{t - nT}{T}\right) $$

where x[n] are the sampled values, T is the sampling interval, and sinc(x) = sin(πx)/(πx). This formula assumes:

- An infinite number of samples

- Perfectly bandlimited original signal

- Ideal sinc interpolation kernels

- No quantization error

The frequency response of ideal reconstruction is a perfect brick-wall lowpass filter with cutoff at the Nyquist frequency fs/2 and passband gain T:

$$ H(f) = \begin{cases} T, & |f| \le f_s/2 \\ 0, & |f| > f_s/2 \end{cases} $$

Practical Reconstruction Challenges

Real-world reconstruction systems face several fundamental limitations:

1. Finite Duration Sinc Approximation

Practical systems must truncate the infinite sinc function to a finite number of sidelobes. This truncation causes:

- Gibbs phenomenon (ringing artifacts) near discontinuities

- Passband ripple in the frequency domain

- Incomplete suppression of spectral images

2. Zero-Order Hold (ZOH) Effects

Most digital-to-analog converters (DACs) implement a zero-order hold, which convolves the ideal impulses with a rectangular pulse of width T. This introduces:

- An inherent lowpass filtering effect with magnitude response |HZOH(f)| = T|sinc(fT)|

- Attenuation near the Nyquist frequency (~3.92 dB at fs/2)

- Phase shift due to the linear phase term e^(-jπfT), equivalent to a constant delay of T/2

3. Anti-Imaging Filter Imperfections

The post-DAC reconstruction filter must remove spectral images while preserving the baseband. Practical filters exhibit:

- Transition bands instead of sharp cutoffs

- Passband droop (typically 0.1-0.5 dB)

- Stopband attenuation limited to 60-120 dB

- Group delay variations

Quantitative Comparison

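The signal-to-reconstruction-error ratio (SRER) quantifies reconstruction quality:

$$ \mathrm{SRER} = 10\log_{10}\left(\frac{\int |x(t)|^2\,dt}{\int |x(t) - \hat{x}(t)|^2\,dt}\right) \ \text{dB} $$

where x̂(t) is the reconstructed signal.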

Typical values for common reconstruction methods:

| Method | SRER (dB) | Complexity |
|---|---|---|
| Ideal sinc | ∞ | Infinite |
| 8-tap windowed sinc | 72-85 | Moderate |
| Zero-order hold | 55-65 | Minimal |
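A metric of this kind can be computed on a dense time grid. The sketch below assumes SRER is the ratio of signal energy to reconstruction-error energy in dB, and compares a zero-order hold with linear interpolation for a single test tone. The signal, rates, and grid density are illustrative assumptions, so the resulting numbers are not expected to match the typical values in the table.

```python
import math


def srer_db(reference: list[float], reconstructed: list[float]) -> float:
    """10*log10(signal energy / error energy); inf when the error is zero."""
    if len(reference) != len(reconstructed):
        raise ValueError("sequences must have equal length")
    signal = sum(r * r for r in reference)
    error = sum((r - y) ** 2 for r, y in zip(reference, reconstructed))
    return math.inf if error == 0 else 10 * math.log10(signal / error)


fs = 48_000.0
f0 = 1_000.0
dense = 16                                   # dense-grid points per sample period
samples = [math.sin(2 * math.pi * f0 * n / fs) for n in range(481)]

reference, zoh, linear = [], [], []
for n in range(480):
    for k in range(dense):
        frac = k / dense
        reference.append(math.sin(2 * math.pi * f0 * (n + frac) / fs))
        zoh.append(samples[n])                                        # hold last sample
        linear.append(samples[n] + frac * (samples[n + 1] - samples[n]))

print(f"zero-order hold:      {srer_db(reference, zoh):.1f} dB")
print(f"linear interpolation: {srer_db(reference, linear):.1f} dB")
```

Much of the zero-order hold's error in this comparison is its half-sample delay, which a delay-compensated metric would not penalize.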

Advanced Reconstruction Techniques

Modern systems employ several compensation methods:

- Inverse Sinc Compensation: Digital pre-emphasis filter with response 1/sinc(fT) to counteract ZOH attenuation

- Oversampling: Increasing fs relaxes anti-imaging filter requirements

- Multirate Filtering: Polyphase filters for efficient high-order interpolation

- Sigma-Delta Modulation: Noise shaping moves quantization error out of band

In high-fidelity audio systems (e.g., 192 kHz DACs), these techniques can achieve SRER > 110 dB across the 20 Hz to 20 kHz band.

3.3 Zero-Order Hold and Its Effects

The zero-order hold (ZOH) is a mathematical model used in signal processing to reconstruct a continuous-time signal from its discrete samples. It operates by holding each sample value constant until the next sample is received, resulting in a piecewise-constant approximation of the original signal. While simple to implement, the ZOH introduces specific distortions and spectral effects that must be accounted for in high-precision systems.

Mathematical Representation

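The ZOH can be modeled as a linear time-invariant (LTI) system with an impulse response given by:

$$ h_{ZOH}(t) = \begin{cases} 1, & 0 \le t < T_s \\ 0, & \text{otherwise} \end{cases} $$

where Ts is the sampling period. The frequency response of the ZOH is obtained by taking the Fourier transform of hZOH(t):

$$ H_{ZOH}(f) = T_s\,\frac{\sin(\pi f T_s)}{\pi f T_s}\,e^{-j\pi f T_s} $$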

The sinc function (sin(πx)/(πx)) introduces amplitude attenuation, while the exponential term represents a linear phase delay. This frequency response shapes the reconstructed signal’s spectrum, attenuating higher frequencies and introducing phase distortion.

Spectral Effects and Aliasing

The ZOH acts as a low-pass filter with a non-ideal frequency response. Its magnitude rolls off with increasing frequency, following the sinc envelope:

$$ |H_{ZOH}(f)| = T_s \left| \frac{\sin(\pi f T_s)}{\pi f T_s} \right| $$

Key observations include:

- Attenuation at Nyquist frequency (f = fs/2): The ZOH attenuates the signal by approximately 3.92 dB (a factor of 2/π) at the Nyquist limit.

- Images and replicas: The ZOH does not eliminate higher-order spectral replicas introduced by sampling. These replicas, though attenuated, remain in the output as images unless filtered, and can alias into the baseband if the signal is sampled again.

- Phase delay: The linear phase term e^(-jπfTs) introduces a constant group delay of Ts/2, which must be compensated in time-critical applications.
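The sinc droop and the gain needed to equalize it are easy to tabulate. The sketch below assumes the normalized ZOH magnitude |sin(πf/fs)/(πf/fs)|, with 0 dB at DC; the function names and the 48 kHz example rate are illustrative.

```python
import math


def zoh_gain_db(f_hz: float, fs_hz: float) -> float:
    """Normalized ZOH magnitude |sinc(f / fs)| in dB (0 dB at DC)."""
    x = math.pi * f_hz / fs_hz
    gain = 1.0 if x == 0 else abs(math.sin(x) / x)
    return 20 * math.log10(gain)


def inverse_sinc_gain_db(f_hz: float, fs_hz: float) -> float:
    """Boost an equalizer must apply at f_hz to flatten the ZOH droop."""
    return -zoh_gain_db(f_hz, fs_hz)


fs = 48_000.0
for f in (1_000.0, 10_000.0, 20_000.0, fs / 2):
    print(f"{f:>8.0f} Hz: droop {zoh_gain_db(f, fs):6.2f} dB, "
          f"compensation {inverse_sinc_gain_db(f, fs):5.2f} dB")

zoh_delay_s = 0.5 / fs      # group delay Ts/2 from the linear phase term
print(f"ZOH delay: {zoh_delay_s * 1e6:.2f} microseconds")
```

Running the same function with a higher fs shows the droop within a fixed audio band shrinking, since the band then occupies a smaller fraction of the sinc main lobe.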

Practical Implications

In real-world systems, the ZOH is commonly implemented in digital-to-analog converters (DACs). Its effects are mitigated through:

- Oversampling: Increasing the sampling rate reduces the attenuation within the band of interest and pushes replicas further out in frequency.

- Reconstruction filtering: A post-DAC analog low-pass filter is used to remove residual high-frequency components.

- Equalization: Inverse filtering can compensate for the sinc roll-off in systems requiring flat frequency response.

Comparison with Higher-Order Holds

While the ZOH is computationally simple, higher-order holds (e.g., first-order or polynomial interpolation) provide smoother reconstruction at the cost of increased complexity. The ZOH remains dominant in applications where latency and computational efficiency are critical, such as real-time control systems.

4. Digital Audio Processing

4.1 Digital Audio Processing

The Sampling Theorem

The Nyquist-Shannon Sampling Theorem establishes the fundamental criterion for accurately reconstructing a continuous-time signal from its discrete samples. For a bandlimited signal x(t) with no frequency components above fmax, the sampling frequency fs must satisfy:

$$ f_s > 2 f_{\max} $$

This ensures that the original signal can be perfectly reconstructed from its samples. The term 2fmax is called the Nyquist rate. Sampling below this rate introduces aliasing, where higher frequencies fold back into the baseband, corrupting the signal.

Mathematical Derivation

Consider a continuous-time signal x(t) with Fourier transform X(f) such that X(f) = 0 for |f| ≥ fmax. Sampling x(t) at intervals Ts = 1/fs yields a discrete sequence x[n] = x(nTs). The spectrum of the sampled signal xs(t) is given by:

$$ X_s(f) = f_s \sum_{k=-\infty}^{\infty} X(f - k f_s) $$

If fs > 2fmax, the spectral replicas do not overlap, and the original signal can be recovered using an ideal low-pass filter with cutoff fs/2:

$$ x(t) = \sum_{n=-\infty}^{\infty} x[n]\,\mathrm{sinc}\left(\frac{t - nT_s}{T_s}\right) $$

where sinc(x) = sin(πx)/(πx) is the interpolation kernel.

Aliasing and Anti-Aliasing Filters

When fs ≤ 2fmax, spectral overlap occurs, causing aliasing. To prevent this, an anti-aliasing filter must be applied before sampling, attenuating frequencies above fs/2. Practical filters (e.g., Butterworth, Chebyshev) introduce a transition band, requiring a slightly higher fs than the Nyquist rate.

Practical Considerations in Digital Audio

In audio applications, the human hearing range (~20 Hz to 20 kHz) dictates fmax. CD-quality audio uses fs = 44.1 kHz, exceeding the Nyquist rate for 20 kHz signals. Oversampling (e.g., 96 kHz or 192 kHz) reduces anti-aliasing filter requirements and improves noise shaping in delta-sigma converters.

Quantization and Bit Depth

Sampling also involves quantization, mapping continuous amplitudes to discrete levels. For N-bit resolution, the signal-to-quantization-noise ratio (SQNR) for a full-scale sinusoid is:

$$ \mathrm{SQNR} \approx 6.02N + 1.76 \ \text{dB} $$

CD audio uses 16-bit quantization (SQNR ≈ 98 dB), while high-resolution formats employ 24 bits (≈146 dB).

4.2 Telecommunications and Data Transmission

The sampling theorem, formulated by Harry Nyquist and later proven by Claude Shannon, is fundamental in modern telecommunications. It states that a continuous-time signal x(t) with no frequency components above B Hz can be perfectly reconstructed from its samples if sampled at a rate fs > 2B. The limiting rate, 2B, is known as the Nyquist rate.

Mathematical Derivation of the Sampling Theorem

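Consider a bandlimited signal x(t) with Fourier transform X(f) satisfying:

$$ X(f) = 0 \quad \text{for } |f| > B $$

When sampled at intervals Ts = 1/fs, the sampled signal xs(t) is:

$$ x_s(t) = \sum_{n=-\infty}^{\infty} x(nT_s)\,\delta(t - nT_s) $$

The Fourier transform of the sampled signal becomes a periodic repetition of X(f) at intervals of fs:

$$ X_s(f) = f_s \sum_{k=-\infty}^{\infty} X(f - k f_s) $$

For perfect reconstruction, the spectral replicas must not overlap. This requires:

$$ f_s > 2B $$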

Practical Considerations in Telecommunications

In real-world systems, several factors necessitate sampling above the Nyquist rate:

- Anti-aliasing filters cannot have ideal brick-wall characteristics, requiring a guard band

- Quantization noise and jitter affect reconstruction accuracy

- Non-ideal interpolation in digital-to-analog converters introduces reconstruction errors

Modern communication standards typically sample at roughly 2.2 to 2.5 times the signal bandwidth B, only modestly above the Nyquist rate of 2B. For example:

| Application | Bandwidth (B) | Typical Sampling Rate (fs) |
|---|---|---|
| Voice Telephony | 3.4 kHz | 8 kHz (2.35 × B) |
| CD Audio | 20 kHz | 44.1 kHz (2.205 × B) |
| 5G NR | 100 MHz | 245.76 MHz (2.4576 × B) |

Multirate Signal Processing in Modern Systems

Contemporary telecommunications employ sophisticated sampling techniques:

- Undersampling (bandpass sampling) for RF signals where fs can be less than 2fmax when certain conditions are met

- Sigma-delta modulation combines oversampling with noise shaping to achieve high resolution

- Compressed sensing enables sub-Nyquist sampling for sparse signals
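The conditions for undersampling mentioned in the first item can be made concrete. The sketch below uses the standard uniform bandpass-sampling result for a real signal occupying [fL, fH]: alias-free rates satisfy 2·fH/n ≤ fs ≤ 2·fL/(n - 1) for integers n from 1 up to floor(fH / (fH - fL)). The function name and the example band are illustrative assumptions.

```python
import math


def bandpass_sampling_ranges(f_low_hz: float, f_high_hz: float) -> list[tuple[float, float]]:
    """Alias-free uniform sampling-rate intervals for a real band [f_low, f_high].

    For each integer n with 1 <= n <= floor(f_high / (f_high - f_low)):
        2 * f_high / n <= fs <= 2 * f_low / (n - 1)
    n = 1 is ordinary sampling (fs >= 2 * f_high, no upper limit).
    """
    if not 0 <= f_low_hz < f_high_hz:
        raise ValueError("need 0 <= f_low < f_high")
    bandwidth = f_high_hz - f_low_hz
    n_max = math.floor(f_high_hz / bandwidth)
    ranges = []
    for n in range(1, n_max + 1):
        lower = 2 * f_high_hz / n
        upper = math.inf if n == 1 else 2 * f_low_hz / (n - 1)
        ranges.append((lower, upper))
    return ranges


# Example: a 2 MHz wide band centered at 5 MHz (4 MHz to 6 MHz).
for lower, upper in bandpass_sampling_ranges(4e6, 6e6):
    print(f"{lower / 1e6:.1f} MS/s <= fs <= {upper / 1e6:.1f} MS/s")
```

For this band the lowest admissible rate is 4 MS/s, twice the bandwidth rather than twice the highest frequency, but the interval around it has zero width, so a practical design would pick a rate inside one of the wider intervals.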

The reconstruction process in digital receivers typically involves:

$$ x(t) = \sum_{n=-\infty}^{\infty} x[n]\,\mathrm{sinc}\left(\frac{t - nT_s}{T_s}\right) $$

where sinc(x) = sin(πx)/(πx) is the ideal interpolation kernel.

Impact on Data Transmission Systems

The sampling theorem directly determines key parameters in digital communication:

- Symbol rate is fundamentally limited by channel bandwidth via the Nyquist criterion

- Intersymbol interference (ISI) is minimized through proper pulse shaping satisfying the Nyquist ISI criterion

- Channel capacity in Shannon's theorem builds upon the sampling theorem's foundation

In optical communications, coherent receivers sample the electric field at the Nyquist rate of the signal bandwidth, not the optical frequency, enabling advanced modulation formats like QAM.

4.3 Medical Imaging and Signal Processing

Nyquist Criterion in Medical Signal Acquisition

The sampling theorem, as formalized by Nyquist and Shannon, states that a continuous signal must be sampled at a rate exceeding twice its highest frequency component to avoid aliasing. In medical imaging, this criterion dictates the minimum sampling rate for signals such as:

- Electrocardiograms (ECGs)

- Magnetic Resonance Imaging (MRI) k-space data

- Ultrasound echo signals

For a signal with bandwidth B, the sampling rate fs must satisfy:

$$ f_s > 2B $$

Practical Challenges in Medical Sampling

While the theorem provides a theoretical lower bound, medical applications often require higher sampling rates due to:

- Non-ideal sensor responses requiring anti-aliasing filters with roll-off regions

- Transient phenomena in physiological signals needing temporal oversampling

- Noise considerations where sampling above Nyquist improves SNR

Case Study: MRI K-Space Sampling

In MRI, the Fourier transform relationship between k-space samples and image space creates unique sampling constraints. The Nyquist criterion translates to:

$$ \Delta k \le \frac{1}{\mathrm{FOV}} $$

where Δk is the sampling interval in k-space and FOV is the desired field of view. Violating this condition manifests as wraparound artifacts in the reconstructed image.
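The wraparound effect follows from the image being periodic with period 1/Δk. The sketch below assumes k is measured in cycles per metre, so that Δk = 1/FOV, and maps the true position of a point to where it appears in the reconstructed image. The function names and the 24 cm field of view are illustrative assumptions.

```python
def max_k_step(fov_m: float) -> float:
    """Largest k-space sampling interval (cycles per metre) for a given FOV."""
    if fov_m <= 0:
        raise ValueError("FOV must be positive")
    return 1.0 / fov_m


def wrapped_position(x_m: float, fov_m: float) -> float:
    """Apparent position of a point at x_m when the image repeats every FOV metres."""
    half = fov_m / 2
    return (x_m + half) % fov_m - half


fov = 0.24                                   # 24 cm field of view
print(f"max delta-k: {max_k_step(fov):.3f} cycles/m")
for x in (0.05, 0.15, -0.20):
    print(f"true {x:+.2f} m -> appears at {wrapped_position(x, fov):+.2f} m")
```

A point inside ±FOV/2 is unchanged, while tissue at +0.15 m reappears at -0.09 m on the opposite side of the image, which is the wraparound artifact described above.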

Advanced Sampling Techniques

Modern medical systems employ sophisticated sampling strategies to overcome Nyquist limitations:

| Technique | Application | Sampling Advantage |
|---|---|---|
| Compressed Sensing | CT/MRI | Sub-Nyquist acquisition with sparsity constraints |
| Non-Uniform Sampling | MRS | Variable density sampling for enhanced resolution |

Quantitative Example: Ultrasound Sampling

Consider a 5 MHz ultrasound transducer with 2 MHz bandwidth. The baseband Nyquist rate would be 4 MS/s, but practical systems typically sample at 10-20 MS/s to:

- Accommodate harmonic imaging components

- Provide oversampling for digital beamforming

- Enable advanced signal processing

Anti-Aliasing in Medical Devices

Medical equipment implements multi-stage anti-aliasing protection:

- Analog filtering (Butterworth/Bessel) at the sensor interface

- Oversampled ADC conversion

- Digital decimation filtering

This cascade supports compliance with device standards such as IEC 60601-2-25, which covers electrocardiographs.

5. Key Research Papers and Books

5.1 Key Research Papers and Books

- PDF Advances in Sampling Theory and Techniques - SPIE — 1.1 A Historical Perspective of Sampling: From Ancient Mosaics to Computational Imaging 1 1.2 Book Overview 5 Part I: Signal Sampling 9 2 Sampling Theorems 11 2.1 Kotelnikov-Shannon Sampling Theorem: Sampling Band-Limited 1D Signals 11 2.2 Sampling 1D Band-Pass Signals 14 2.3 Sampling Band-Limited 2D Signals; Optimal Regular Sampling Lattices 16

- The Sampling Theorem | Handbook of Fourier Analysis & Its Applications ... — 1765 - Lagrange derives the sampling theorem for the special case of periodic bandlimited signals [].1841 - Cauchy's recognition of the Nyquist rate [] 1.1897 - Borel's recognition of the feasibility of regaining a bandlimited signal from its samples [].1915 - E.T. Whittaker's publishes his highly cited paper on the cardinal series [].1928 - H. Nyquist establishes the time-bandwidth ...

- PDF SamplingTheory BeyondBandlimitedSystems - Cambridge University Press ... — Covering the fundamental mathematical underpinnings together with key principles and applications, this book provides a comprehensive guide to the theory and practice of ... 1.1 Standard sampling 2 1.2 Beyond bandlimited signals 5 1.3 Outline and outlook 6 ... 4.2.1 The Shannon-Nyquist theorem 98 4.2.2 Sampling by modulation 100

- PDF Sampling and Quantization - Princeton University — SAMPLING AND QUANTIZATION 0 0.5 1 1.5 2 2.5 −1 −0.8 −0.6 −0.4 −0.2 0 0.2 0.4 0.6 0.8 1 Sampling where ω s >> ω Figure 5.6: Sampling a sinusoid at a high rate. To begin, consider a sinusoid x(t)=sin(ωt) where the frequency ω is not too large. As before, we assume ideal sampling with one sample every T seconds. In

- Sampling and Reconstruction - SpringerLink — The sampling theorem is a remarkable result, as it tells us that exact reconstruction of a continuous function is possible from a discrete set of sampled values; the assumption that the signal is band-limited is key here, and so is the choice of the sampling frequency. The sampling theorem is often called the Nyquist-Shannon Sampling Theorem ...

- Sampling of Signals and Discrete Mathematical Methods — The mathematics of sampling was first discovered by Whittaker [] in 1915.As a mathematician, he did not realise the engineering implications of the theory however and it was left to two others, namely a Russian Kotelnikov [] in 1933 and independently by an American Claude Shannon [] in 1948.The theory considers ideal sampling, that is samples that are obtained instantaneously by a unit impulse.

- The Sampling Theorem and Several Equivalent Results in Analysis — the Whittaker±Kotel'nikov±Shannon sampling theorem) and other funda- mental theorems of real and complex analysis such as Poisson's summation formula and Cauchy's integral formula (see, e.g., [6 ...

- Advances in Sampling Theory and Techniques - ResearchGate — Moiré effect in sampling and reconstruction of a sinusoidal signal: (a) test signal of frequency f 0 ¼ 0.4 (fraction of the sampling baseband width) and this signal sampled and reconstructed; (b ...

- PDF CHAPTER 5: FUNDAMENTALS OF SAMPLED DATA SYSTEMS - Analog — fundamentals of sampling theory. SECTION 5.1: CODING AND QUANTIZING Analog-to-digital converters (ADCs) translate analog measurements, which are characteristic of most phenomena in the "real world," to digital language, used in information processing, computing, data transmission, and control systems. Digital-to-

- PDF AN-236An Introduction to the Sampling Theorem - Texas Instruments — requires sampling at greater than 4Kc to preserve and recover the waveform exactly. The consequences of sampling a signal at a rate below its highest frequency component results in a phenomenon known as aliasing. This concept results in a frequency mistakenly taking on the identity of an entirely different frequency when recovered.

5.2 Online Resources and Tutorials

- Unit 5 | unit 5 the sampling theorem - Goseeko — 5.1 The sampling theorem and its implications- spectra of sampled signals Sampling theorem states that for a continuous form of a time-variant signal it can be represented in the discrete form of a signal with help of samples and the sampled (discrete) signal can be recovered to original form when the sampling signal frequency Fs having the greater frequency value than or equal to the input ...

- 5.2.1. The Sampling Theorem — Digital Signal Processing — 5.2.1. The Sampling Theorem We are interested in the relation between x[n] and x(t) where x[n] = x(nTs). However we want our sampled signal to be represented as CT signal as well. In that case we can compare the CT Fourier transform of x(t) with the Fourier transform of its sampled version and see whether we lost information in the sampling process. In order ...

- 5.2. Sampling — Digital Signal Processing — 5.2. Sampling So far CT and DT signals are treated more or less independently of each other. In most practical applications of discrete time signal processing the signal x[t] finds its origin in some continuous time signal x(t). The most often used way to make a DT signal out of a CT signal is called sampling. We assume the sampling to be equidistant in time. In that case sampling ...

- 5.3.1. The Sampling Theorem — Signal Processing 1.1 documentation — 5.3.1. The Sampling Theorem We are interested in the relation between x[n] and x(t) where x[n] = x(nTs). However we want our sampled signal to be represented as CT signal as well. In that case we can compare the CT Fourier transform of x(t) with the Fourier transform of its sampled version and see whether we lost information in the sampling process. In ...

- PDF Microsoft Word - EDCh 5 sampling theory.doc - Analog — SECTION 5.2: SAMPLING THEORY This section discusses the basics of sampling theory. A block diagram of a typical real-time sampled data system is shown in Figure 5.23.

- Chapter 5: Sampling and Quantization - studylib.net — Chapter 5 Sampling and Quantization Often the domain and the range of an original signal x(t) are modeled as continuous. That is, the time (or spatial) coordinate t is allowed to take on arbitrary real values (perhaps over some interval) and the value x(t) of the signal itself is allowed to take on arbitrary real values (again perhaps within some interval). As mentioned previously in Chapter ...

- 5.2 The sampling theorem, Digital signal processing, By ... - Jobilize — The sampling theorem Digital transmission of information and digital signal processing all require signals to first be "acquired" by a computer.

- PDF Sampling and Quantization - Princeton University — In this chapter, we consider some of the fundamental issues and techniques in converting between analog and digital signals. For sampling, three fundamental issues are (i) How are the discrete-time samples obtained from the continuous-time signal?; (ii) How can we reconstruct a continuous-time signal from a discrete set of samples?; and (iii) Under what conditions can we recover the ...

- 2. Digital sampling — Digital Signals Theory - Brian McFee — 2. Digital sampling In the previous chapter, signals were assumed to exist in continuous time. A signal was represented as x(t), where t could be any continuous value representing time: 0, 3, −1/12, 2, ... are all valid values for t. Digital computers, the kind we write programs for, cannot work directly with continuous signals.

- PDF UQ Robotics Teaching — Sampling Theory 2019 School of Information Technology and Electrical Engineering at The University of Queensland

5.3 Advanced Topics for Further Study

- PDF Sampling and Quantization - Princeton University — 5.3 The Sampling Theorem In this section we discuss the celebrated Sampling Theorem, also called the Shannon Sampling Theorem or the Shannon-Whitaker-Kotelnikov Sampling Theorem, after the researchers who discovered the result. This result gives conditions under which a signal can be exactly reconstructed from its samples.

- 5.3: The Sampling Theorem - Engineering LibreTexts — The Sampling Theorem Digital transmission of information and digital signal processing all require signals to first be "acquired" by a computer.

- PDF Advances in Sampling Theory and Techniques - SPIE — Researchers, engineers, and students will benefit from the most updated formulations of the sampling theory, as well as practical algorithms of signal and image sampling with sampling rates close to the theoretical minimum and interpolation-error-free methods of signal/image resampling, geometrical transformations, differentiation, and integration.

- 5.3.1. The Sampling Theorem — Signal Processing 1.1 documentation — The sampling theorem states that in case the sampling frequency is more than two times larger than the highest frequency in the signal, the CT signal x(t) can be exactly reconstructed from its DT samples x[n].

- Advanced Topics in Shannon Sampling and Interpolation Theory — Advanced Topics in Shannon Sampling and Interpolation Theory is the second volume of a textbook on signal analysis solely devoted to the topic of sampling and restoration of continuous time signals and images.

- PDF Sampling - students.aiu.edu — Further, with a sampling procedure in which a point location is chosen at random in a study region and sample units are then centered around the selected points, the sample units can potentially overlap, and hence the number of units in the population from which the sample is selected is not finite.

- PDF Microsoft Word - EDCh 5 sampling theory.doc - Analog — In the example shown, a good sample-and-hold could acquire the signal in 2 μs, allowing a sampling frequency of 100 kSPS, and the capability of processing input frequencies up to 50 kHz. A complete discussion of the SHA function including these specifications follows later in this chapter.

- PDF The Sampling Theorem - New Jersey Institute of Technology — Shannon's Sampling Theory A BL signal which has no spectral components above the frequency fM is uniquely specified by its values at uniform intervals of 1/(2 fM) seconds.